The Pursuit of Truth: The Impact of CRESST’s Research

By John McDonald

November 14, 2025

For those uninitiated in the world of research, methodology, assessment, and evaluation, the work of the National Center for Research on Evaluation, Standards, and Student Testing (CRESST) at UCLA can be hard to understand. Concepts such as Bayesian inference and the fine-grained analysis of large, complex data sets using advanced mathematics and psychometric and statistical methods can be hard to explain. The work can seem a little intimidating, even to other academic researchers.

But in some ways, CRESST’s work is simple.

“The essence of our work is that we are fearless in the pursuit and telling of the truth,” says UCLA Professor Li Cai, the director of CRESST. “We are fearless in pushing the boundaries of being rigorous and doing cutting-edge work. Our goal is to make education research, education science, better.”

CRESST is an internationally recognized research center with decades of groundbreaking experience in educational research. Its mission is to pioneer high-caliber research, develop cutting-edge assessments, and drive measurement innovation to elevate teaching and learning worldwide.

“For more than 50 years, CRESST has conducted groundbreaking research, spawning innovation that has shaped the way the fields of evaluation and assessment are thought of today,” says Christina Christie, Wasserman Dean of the UCLA School of Education and Information Studies, and an expert in evaluation.

CRESST was originally established at the UCLA School of Education in 1966 as the Center for the Study of Evaluation. Increasing access to high-quality education was a north star of President Lyndon B. Johnson’s “War on Poverty,” and the passage of the 1965 Elementary and Secondary Education Act fueled a rapidly emerging demand for evaluation of education. With funding from the U.S. Department of Health, Education, and Welfare, UCLA was selected to serve as the site of one of nine U.S. Office of Education research and development centers, launching a new effort to research theories and methods of analyzing educational systems and programs and to conduct studies evaluating their effects. UCLA Education Dean John Goodlad initially tapped Professor Merlin Wittrock to lead the effort, but Wittrock soon retired from UCLA. Under increasing pressure to name a leader, Goodlad turned to UCLA Emeritus Professor of Education Marvin Alkin – then a 32-year-old UCLA assistant professor – who had helped to organize and launch the center. Alkin became what many consider to be the founding director of the Center for the Study of Evaluation, leading the effort from its earliest groundbreaking years through the mid-1970s.

In a 1969 paper submitted to the U.S. Department of Health, Education, and Welfare, Alkin wrote, “Inherent in the development of a model of educational evaluation is the development of a theory of evaluation. The development of such a theory of evaluation has been established as the major goal of the Center for the Study of Evaluation.” Alkin defined the center’s work as “devoted exclusively to the study of the evaluation of educational systems and instructional programs.”

In 1975, UCLA Professor Eva Baker became director of the Center for the Study of Evaluation. Under her leadership, the center’s focus would shift to exploring the connection between assessment and teaching, incorporating the study of technology in evaluation and assessment.

In 1983, Baker helped to lead the formation of the National Center for Research on Evaluation, Standards, and Student Testing (CRESST). The new research partnership brought UCLA together with the University of Colorado at Boulder, Cornell University, Yale University, the American Federation of Teachers, the Educational Testing Service, the National Education Association, and other universities and research institutions to study theories and methods for evaluating educational systems and programs, focusing on assessment and its connection to teaching and technology. The aim was to improve the quality of education by developing scientifically based evaluation and testing techniques and using sound data for better decision-making and accountability. The Center for the Study of Evaluation operated in combination with CRESST through the early 2000s, when the name changed to CRESST.

UCLA researcher Joan Herman joined Baker as co-director of CRESST in 1983. A former schoolteacher and school board member, Herman focused her work in part on the effects of testing on schools and the design of assessment systems to support school planning and instructional improvement.

CRESST would pursue what Baker described in an issue of Evaluation Comment as “a continuing mission to improve educational assessment.” In the issue, she describes the goals and perspectives that shaped CRESST’s research program as follows: With a focus on the assessment of education quality, CRESST expects to study: (1) assessment that leads to improvement in teaching and learning; (2) assessment policy and large-scale practice; (3) improved technical knowledge about the quality of assessment; and (4) dissemination and outreach that successfully decrease the interval between research and practice. Baker added that the conceptual model that underlies the research program emphasizes societal impact as the goal and identifies four major domains: validity, fairness, credibility, and utility.

Over the years, that framework helped to guide an ambitious agenda of research generating dozens if not hundreds of research projects exploring evaluation and assessment and shaping education practice and policy. In addition to work with schools and teachers, those efforts included an initiative funded by the Institute of Education Sciences in 2007 to study evaluation and assessment in educational gaming, creating the Center for Advanced Technology in Schools. CRESST would partner with the Smarter Balanced Assessment Consortium in 2010, receiving a national Race to the Top Award that funded work across 30 states to co-create a new system of educational assessments. This year, CRESST has continued to push the envelope, contributing to the “Handbook for Assessment in the Service of Learning,” a new open-source resource offering a theoretical and research-grounded vision for transforming educational assessment into a catalyst for learning.

In 2015, after several years as co-director, Li Cai, a professor of education and psychology in the Advanced Quantitative Methodology program at the UCLA School of Education and Information Studies, was named director of CRESST. A statistician whose research encompasses educational and psychological measurement, computational statistics, and research design, Cai leads an interdisciplinary team of experts in an ongoing exploration intently focused on the fields of assessment, evaluation, technology, and learning.

“Our projects, they are always inspired by real problems,” says Cai. “Our methodological questions require math, they require lots of statistics, they require new ideas and ingenuity, but essentially they are inspired research that serves specific purposes.”

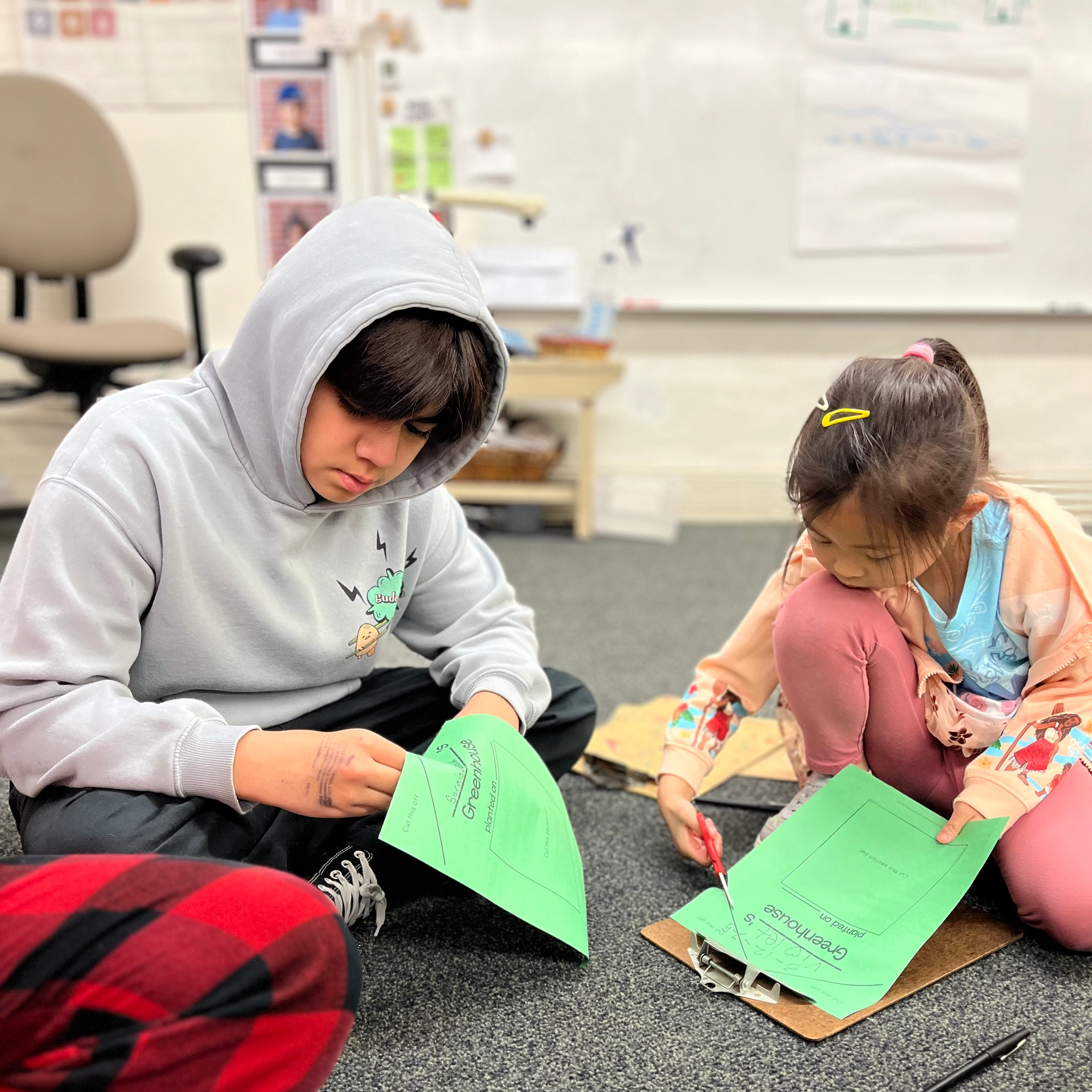

Among the many efforts is a partnership between PBS KIDS and CRESST to advance the effectiveness of the PBS Ready to Learn program. The program uses television, the internet, and other platforms to help millions of young children, especially those in poverty, acquire essential reading, math, and science skills. CRESST developed the Learning Analytics Platform, a sophisticated technology system, to track and evaluate children’s learning processes across different media. The platform records the interactions of tens of thousands of children every month, providing a rich dataset for analysis. CRESST also created a telemetry format, a standardized method of collecting and organizing data, to capture detailed learning interactions, providing an in-depth understanding of the instructional, assessment, and interactive properties of each game. Drawing on their background in game design and game data analysis, the CRESST team developed methodologies to measure and analyze children’s learning processes. Their research showed that the platform can effectively assess and predict mathematics performance. By analyzing gameplay data, CRESST determined which aspects of the games were most effective for learning, enabling PBS KIDS to refine its content for better educational outcomes.
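For readers curious what a standardized telemetry format might look like in practice, here is a minimal illustrative sketch in Python. It is not CRESST’s actual format or the Learning Analytics Platform’s code. Every name in it (TelemetryEvent, its fields, the event types, and success_rate_by_player) is a hypothetical stand-in chosen for illustration. The idea it shows is simply that when every game logs interactions in the same shape, one analysis routine can summarize learning behavior across all of them.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Any


@dataclass(frozen=True)
class TelemetryEvent:
    """One logged interaction. Every field name here is hypothetical."""
    player_id: str     # anonymized identifier for the child
    game_id: str       # which game produced the event
    event_type: str    # e.g. "attempt" or "hint_request"
    timestamp: float   # seconds since the session started
    data: dict[str, Any] = field(default_factory=dict)  # event-specific details


def success_rate_by_player(events: list[TelemetryEvent]) -> dict[str, float]:
    """Return the share of "attempt" events marked correct, per player."""
    attempts: dict[str, int] = defaultdict(int)
    correct: dict[str, int] = defaultdict(int)
    for event in events:
        if event.event_type != "attempt":
            continue  # hints and other event types are ignored in this summary
        attempts[event.player_id] += 1
        if event.data.get("correct", False):
            correct[event.player_id] += 1
    return {player: correct[player] / attempts[player] for player in attempts}


# Made-up sample events, for illustration only.
events = [
    TelemetryEvent("p1", "counting_game", "attempt", 3.2, {"correct": True}),
    TelemetryEvent("p1", "counting_game", "hint_request", 5.0),
    TelemetryEvent("p1", "counting_game", "attempt", 9.1, {"correct": False}),
    TelemetryEvent("p2", "counting_game", "attempt", 2.4, {"correct": True}),
]
rates = success_rate_by_player(events)
```

A per-player success rate is only one of many summaries such a shared format would allow. The research described above draws on far richer measures of children’s learning processes.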

CRESST has done significant work to better understand and address the needs of English language learners and the educators who serve them.

In 2016, CRESST brought the “English Language Proficiency Assessment for the 21st Century” program in-house to devise a groundbreaking, comprehensive assessment system for English learners. Known as ELPA21, the system is designed to improve outcomes for English learners (ELs) and support states in meeting federal requirements.

ELPA21 provides valid, reliable measures of EL students’ progress toward English language proficiency through assessments grounded in English Language Proficiency standards. The ELP Standards provide a framework for English learners to acquire the content knowledge and English proficiency needed for school success. ELPA21 has also developed assessments for English learners, including a Dynamic Screener for initial identification of English learners, a Summative Assessment measuring annual English language proficiency, and Alternative ELPA Screeners and Summative Assessments — the first assessments of their kind explicitly designed for English learners with the most significant cognitive disabilities. ELPA21 provides a comprehensive suite of professional learning opportunities to K–12 educators nationwide, helping them effectively use and implement student assessment results.

CRESST is deeply engaged in the study and application of learning technology.

The research has been led by Greg Chung, associate director of technology and research innovation. The work focuses on the impact of learning technologies, such as games and intelligent tutoring systems, on learning and engagement outcomes, and the design of telemetry systems for games. The research spans the evaluation of learning technologies, development of computer-based games and simulations, AI-based computational methods and more.

A recently published study by CRESST researchers Kayla Teng and Chung, titled “Measuring Children’s Computational Thinking and Problem-Solving in a Block-Based Programming Game,” uses existing data from children’s gameplay in a block-based programming game called “codeSpark Academy” to examine how children’s gameplay behavior can be used to measure their computational thinking and, more generally, their problem-solving skills.

CRESST is engaged in research in the use of artificial intelligence, driving technical innovation in learning and assessment and shaping the national research and development agenda.

“The entire educational measurement enterprise, the whole field, is feeling the impact of AI, and those in evaluation and assessment have been among the first to adopt its use in some ways. AI-assisted scoring of writing and speech samples is already readily available, and the technology shows great potential assisting in the design of assessments, reducing the time and labor needed for repetitive tasks and saving costs,” says Cai.

“We think that it is most likely going to be a foundational efficiency, a tool for improvement that, if used appropriately, can free up time for teachers to focus more on instruction and helping individual students, scale up solutions and save money, helping limited education resources to flow directly to students and teachers. It’s not going to be the only solution, but it’s going to help.”

The connection with K-12 education remains central to the mission of CRESST.

In addition to working to inform and strengthen education policy at the state level through participation in the Council of Chief State School Officers State Assessment Collaborative, CRESST provides direct assessment services to state education agencies. For example, through ELPA21 the center offers direct English language proficiency screening and summative assessment services to 11 states, assessing over 410,000 kids each year to determine their initial classification and whether they meet annual growth targets set by their state and district, enabling schools, states and districts to comply with federal requirements.

CRESST provides direct technical assistance to state education agencies—helping them respond to questions from teachers and school leaders, identify resources to improve training on the use of assessment results, and offer professional development to strengthen teachers’ understanding and effective use of assessment in instruction. It is also deeply engaged in the evaluation of educational programs.

In one major decade-long project, a team of researchers led by Jia Wang, CRESST assistant director for program evaluation, has been evaluating the effectiveness of magnet schools participating in the federal Magnet Schools Assistance Program. CRESST researchers have evaluated 40 schools, surveying faculty and students and collecting data to assess program success. Their published studies highlight improvements in student achievement and show that the way a magnet school is implemented plays a major role in its effectiveness. The CRESST evaluation also identified best practices for effective magnet school governance.

In a recently published CRESST paper, “A Quasi-experimental Approach to Evaluating Magnet Schools,” magnet school evaluators document the quasi-experimental, mixed methods approach they developed for evaluating the effectiveness of magnet schools throughout the U.S. The paper lays out the evaluation research design so that other scholars and practitioners working in similar school settings can draw on its best practices.

CRESST is expanding its work in higher education. In 2023, CRESST began partnering with the American Council on Education (ACE) to disseminate data trends from the vast stores of longitudinal data collected through the Higher Education Research Institute (HERI), which became part of CRESST that same year. The partnership bolsters an effort to develop an online survey portal that consolidates data collection and increases transparency for educational researchers.

Over the five decades of its existence, CRESST has worked with a wide range of partners, from schools and universities to state and federal agencies and other organizations, on more than 200 projects.

“In our work, we provide the numbers that keep everyone honest,” says Cai.

But it is more than that.

Cai sees the role of CRESST as pushing the envelope: thinking anew, asking how the work can be done better and how more can be learned, and finding ways to help people understand and believe in these methods so that they can adopt them and change.